Loading data from Kaggle into S3 is a two-step process. In the first step, we configure the Kaggle command line tool and download the data to our local machine. In the second step, we copy the data from the local machine into an S3 bucket.

Get data from Kaggle

To get data from Kaggle, we set up the Kaggle command line tool and then generate an API token that lets it download data.

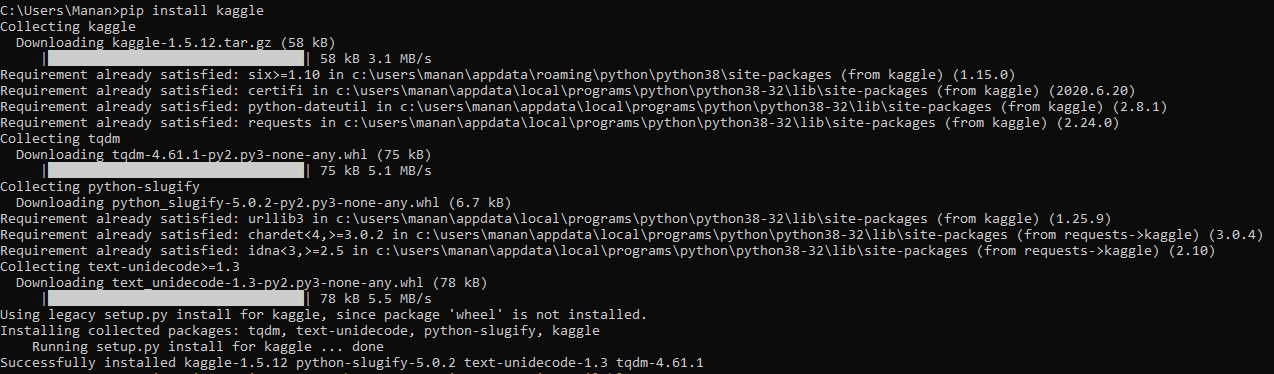

Set up Kaggle Command Line

To get data from Kaggle, we install the official Kaggle command line tool, which is distributed as the kaggle Python package:

pip install kaggle

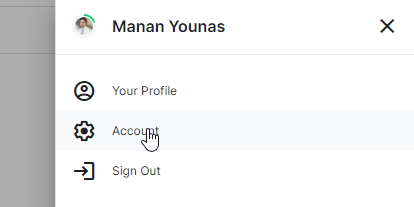
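pip sometimes installs the kaggle script into a directory that is not on your PATH (for example ~/.local/bin after a --user install), in which case the shell reports that the command is not found. As a minimal sketch, the hypothetical helper require_cmd below checks whether a command is available before you rely on it:

```bash
# Hypothetical helper: succeed if a command is on PATH, otherwise print a hint.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "'$1' was not found on PATH; check where pip installed it" >&2
  return 1
}

# Usage after `pip install kaggle`:
#   require_cmd kaggle && kaggle --version
```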

Create API tokens

On the Kaggle website, click on your account name in the top right corner and then click on Account.

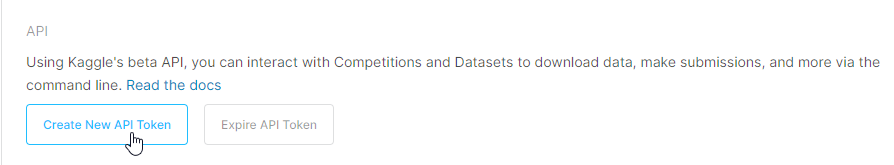

The Account page has an API panel with a button for creating a new API token.

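Clicking it downloads kaggle.json, a small file containing your Kaggle username and API key that the CLI uses as its token. Move this file to the folder where the Kaggle CLI looks for it, which by default is .kaggle in your home directory (~/.kaggle/kaggle.json).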
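Moving the token can also be scripted. The sketch below is a hypothetical helper, install_kaggle_token, which assumes you pass it the path where your browser saved kaggle.json. It honors the KAGGLE_CONFIG_DIR environment variable, which the Kaggle CLI uses to override the default ~/.kaggle location, and sets the file's permissions to 600, since the CLI warns when the key is readable by other users.

```bash
# Hypothetical helper: move a downloaded kaggle.json to where the Kaggle CLI
# looks for it and make it readable only by the current user.
install_kaggle_token() {
  local src="$1"                                       # path to the downloaded kaggle.json
  local dest_dir="${KAGGLE_CONFIG_DIR:-$HOME/.kaggle}" # KAGGLE_CONFIG_DIR overrides the default
  if [ ! -f "$src" ]; then
    echo "no token file at $src" >&2
    return 1
  fi
  mkdir -p "$dest_dir"
  mv "$src" "$dest_dir/kaggle.json"
  chmod 600 "$dest_dir/kaggle.json"                    # owner read/write only
}
```

For example, `install_kaggle_token ~/Downloads/kaggle.json`, assuming the browser saved the file to ~/Downloads.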

Download data locally

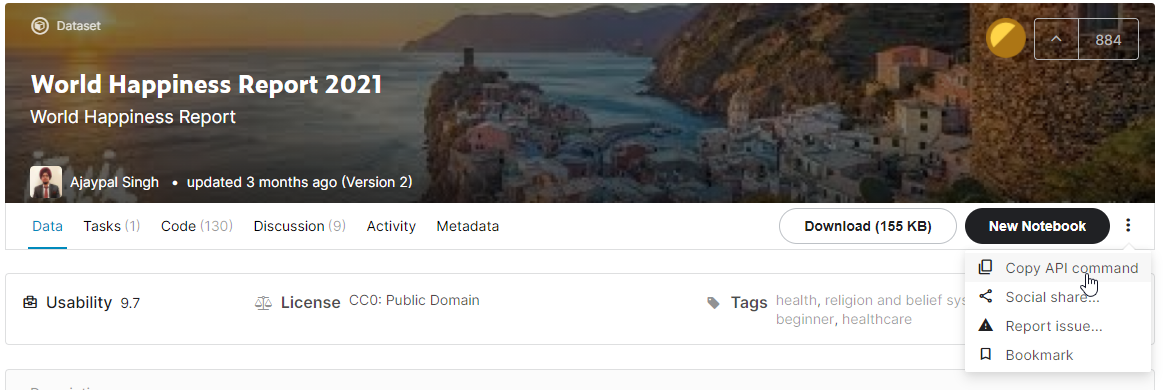

To demonstrate, I am using the World Happiness Report 2021 dataset from Kaggle.

On the dataset page, click the three dots on the right side and select Copy API command. This copies the download command for the dataset:

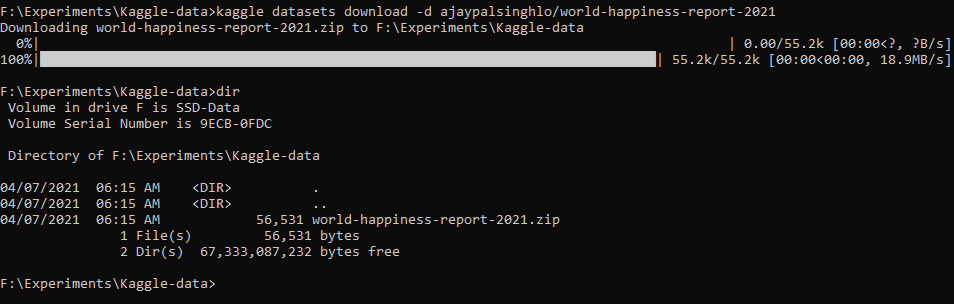

kaggle datasets download -d ajaypalsinghlo/world-happiness-report-2021

Running this command downloads the dataset as world-happiness-report-2021.zip into the current directory on your local machine.
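If you want the zip in a specific folder, the CLI's -p option sets the download path. The hypothetical download_dataset helper below is a minimal sketch that wraps the copied command, assumes the zip is named after the dataset part of the slug (as world-happiness-report-2021.zip is here), and prints the zip's path so later steps can reuse it:

```bash
# Hypothetical helper: download a Kaggle dataset into a folder and print the zip path.
download_dataset() {
  local slug="$1"                    # owner/dataset, as in the copied API command
  local dest="${2:-.}"               # target folder, defaults to the current directory
  local zip="$dest/${slug#*/}.zip"   # assumes the zip is named after the dataset
  mkdir -p "$dest"
  # Send the CLI's progress output to stderr so stdout carries only the path.
  kaggle datasets download -d "$slug" -p "$dest" >&2 || return 1
  if [ ! -f "$zip" ]; then
    echo "expected $zip was not created" >&2
    return 1
  fi
  echo "$zip"
}
```

For example, `zip=$(download_dataset ajaypalsinghlo/world-happiness-report-2021 data)` leaves the path of the downloaded file in `$zip`.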

Copy data to S3

Set up AWS Command Line

To copy data from the local machine to an AWS S3 bucket, we use the AWS command line tools (AWS CLI), which need to be installed locally. Before using them, we need to generate AWS access credentials, which can be done from the AWS web console.

Generate AWS access keys

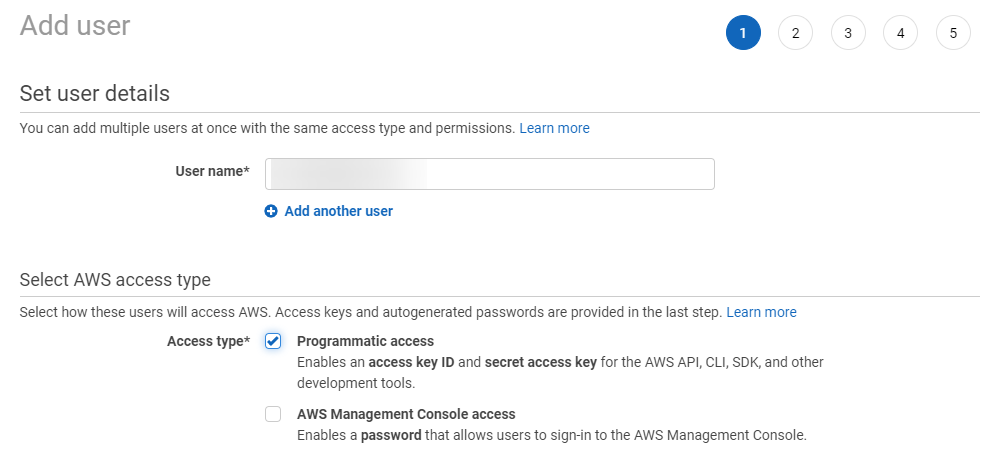

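In the AWS console, select IAM from the services and go to Users.

Click on Add user.

Enter a user name, check Programmatic access, and give the user permission to use S3, for example by attaching the AmazonS3FullAccess policy.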

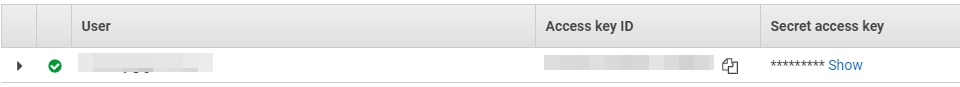

Once the user is created, AWS generates an access key ID and a secret access key. Save both: the secret access key is shown only once.
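If you already have an AWS CLI profile with permission to manage IAM, the same user can be created from the command line. This is a minimal sketch: create_upload_user and the user name kaggle-s3-uploader are hypothetical, and AmazonS3FullAccess is broader than this tutorial needs, so a policy limited to the target bucket is a safer choice for anything beyond experimentation.

```bash
# Hypothetical helper: create an IAM user for uploads, give it S3 access and
# create an access key. Must be run with credentials that can manage IAM.
create_upload_user() {
  local user="$1"
  aws iam create-user --user-name "$user" || return 1
  aws iam attach-user-policy --user-name "$user" \
    --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess || return 1
  # Prints JSON with AccessKeyId and SecretAccessKey; the secret is only
  # returned at creation time, so store it safely.
  aws iam create-access-key --user-name "$user"
}
```

For example, `create_upload_user kaggle-s3-uploader`.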

Configure keys on the local system

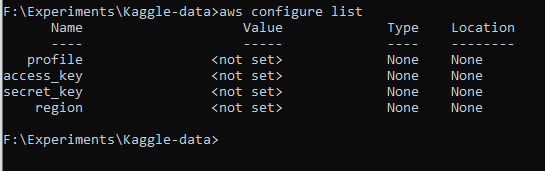

To check the current AWS key configuration, type:

aws configure list

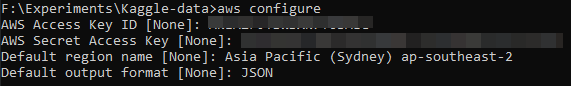

Use the aws configure command to enter the access key ID and secret access key from the previous step. It also asks for a default region name and output format.
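The same settings can be written without the interactive prompts using aws configure set, after which aws sts get-caller-identity confirms that the keys work (it needs no IAM permissions). This is a minimal sketch: configure_aws is a hypothetical helper, us-east-1 is an assumed default region, and keys passed as arguments can end up in your shell history, so treat it as a convenience for throwaway setups.

```bash
# Hypothetical helper: non-interactive alternative to `aws configure` for the
# default profile, followed by a check of which identity the keys belong to.
configure_aws() {
  local key_id="$1" secret="$2"
  local region="${3:-us-east-1}"   # assumed default region; change as needed
  aws configure set aws_access_key_id "$key_id" || return 1
  aws configure set aws_secret_access_key "$secret" || return 1
  aws configure set region "$region" || return 1
  # Fails if the keys are wrong; otherwise prints the account and user ARN.
  aws sts get-caller-identity
}
```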

A successful configuration results in a configuration list that looks like the following:

Create S3 Bucket

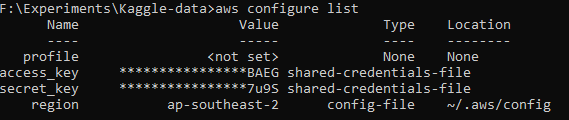

In the AWS console, select the S3 service and click on Create bucket. Bucket names are shared across all AWS accounts, so the name must be globally unique.

The URI for accessing the bucket is not displayed in the console, but it follows the pattern s3://<bucket-name>. In our case, it is s3://world-happiness-data.
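Bucket creation can also be done from the CLI with aws s3 mb. The hypothetical make_bucket helper below is a minimal sketch that first checks the name against the basic S3 naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit). It does not cover every rule AWS enforces, and it cannot tell whether the name is already taken.

```bash
# Hypothetical helper: check a bucket name against basic S3 naming rules, then create it.
make_bucket() {
  local bucket="$1"
  # 3-63 characters; lowercase letters, digits, dots and hyphens;
  # must start and end with a letter or digit. (Not the full rule set.)
  if ! printf '%s\n' "$bucket" | grep -Eq '^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$'; then
    echo "invalid bucket name: $bucket" >&2
    return 1
  fi
  aws s3 mb "s3://$bucket"
}
```

For example, `make_bucket world-happiness-data`.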

Copy data from the local machine to S3

Now that the bucket is created and the AWS CLI is configured, we can run the copy command:

aws s3 cp world-happiness-report-2021.zip s3://world-happiness-data


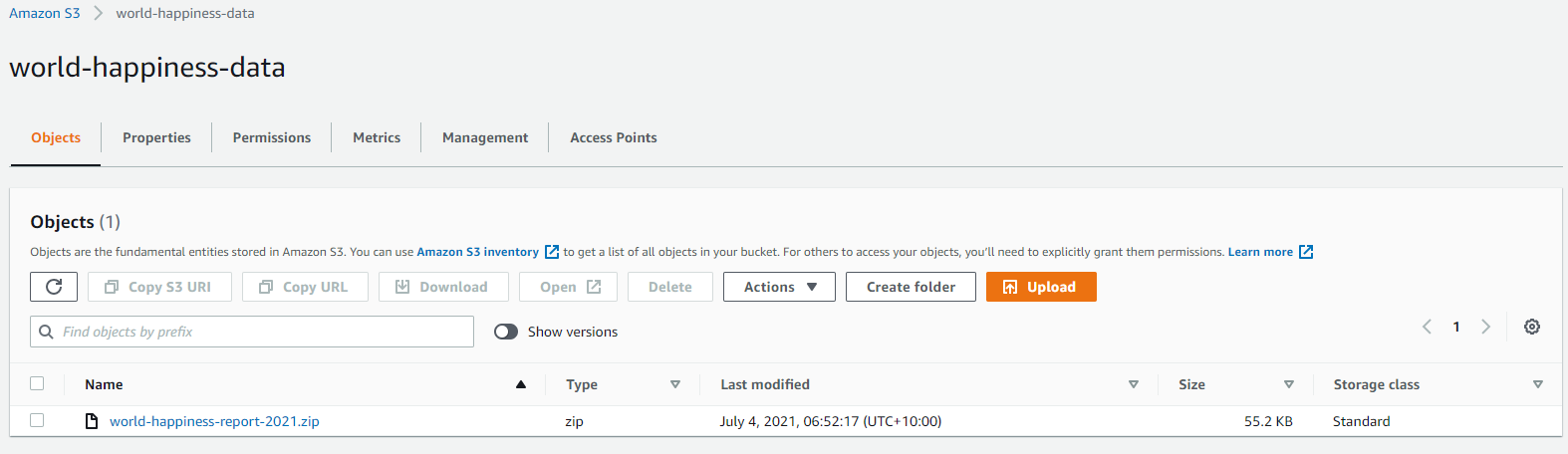
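As written, the command stores the file at the top level of the bucket under its own name. The hypothetical upload_to_s3 helper below is a minimal sketch that optionally places the file under a key prefix (raw/kaggle in the usage example is an arbitrary choice) and prints the resulting S3 URI:

```bash
# Hypothetical helper: upload a local file to S3, optionally under a key prefix,
# and print the object's URI.
upload_to_s3() {
  local file="$1" bucket="$2" prefix="$3"
  local key
  key="$(basename "$file")"
  if [ -n "$prefix" ]; then
    key="${prefix%/}/$key"         # drop a trailing slash from the prefix
  fi
  # Send the CLI's progress output to stderr so stdout carries only the URI.
  aws s3 cp "$file" "s3://$bucket/$key" >&2 || return 1
  echo "s3://$bucket/$key"
}
```

For example, `upload_to_s3 world-happiness-report-2021.zip world-happiness-data raw/kaggle` prints `s3://world-happiness-data/raw/kaggle/world-happiness-report-2021.zip` when the upload succeeds.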

Verifying the upload

We can verify the upload by going to the AWS console > S3 > world-happiness-data and checking that world-happiness-report-2021.zip is listed in the bucket.
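The check can also be done from the command line. As a minimal sketch, the hypothetical verify_upload helper asks S3 for the object's metadata with aws s3api head-object, which exits with an error if the object does not exist (or if the credentials are not allowed to read it):

```bash
# Hypothetical helper: confirm that an object exists in a bucket.
verify_upload() {
  local bucket="$1" key="$2"
  if aws s3api head-object --bucket "$bucket" --key "$key" >/dev/null 2>&1; then
    echo "found s3://$bucket/$key"
  else
    echo "missing s3://$bucket/$key" >&2
    return 1
  fi
}
```

For example, `verify_upload world-happiness-data world-happiness-report-2021.zip`.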